Financial fraud is escalating worldwide.

The global cost of fraud and cybercrime is projected to hit $10.5 trillion this year, with the financial services industry expected to be a prime target.

The 250% jump from an annual loss of $3 trillion a decade ago has been driven largely by generative AI, according to a recent report by the World Economic Forum, referencing data from research firm Cybersecurity Ventures.

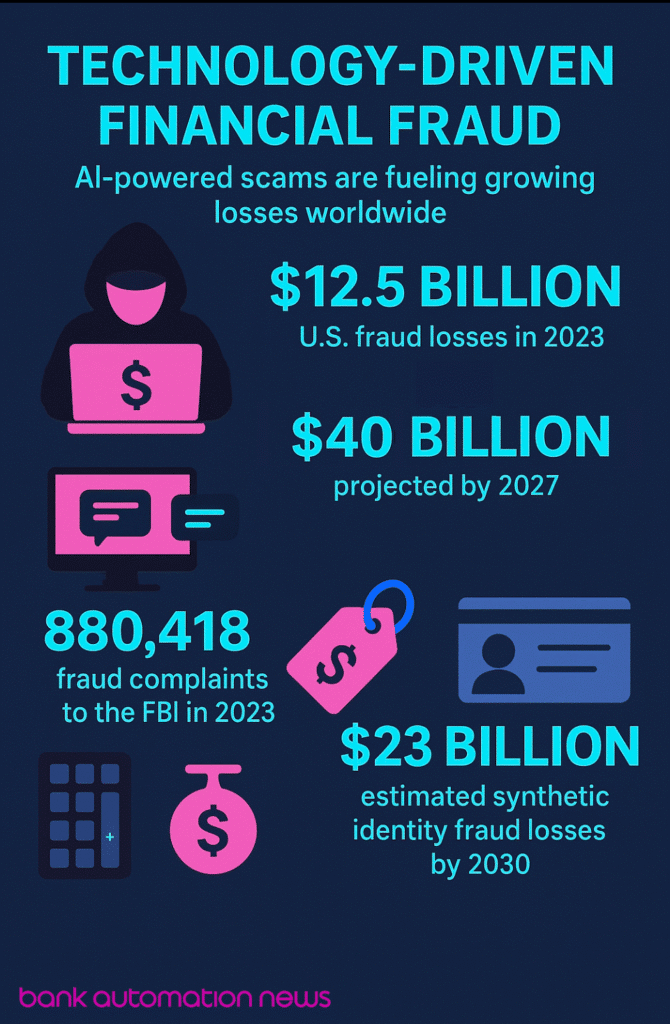

U.S. fraud losses totaled $12.5 billion in 2023 and are projected to grow at a compound annual rate of 32% as AI tools become increasingly accessible, Satish Lalchand, principal of Deloitte Transactions and Business Analytics, told Bank Automation News (BAN), an Auto Finance News sister publication.

In fact, generative AI could push U.S. fraud losses to $40 billion by 2027, prompting calls for tighter vigilance and faster responses from financial institutions, Lalchand said.
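To make the 32% figure concrete, here is a back-of-the-envelope sketch that compounds the $12.5 billion 2023 figure forward four years using only the numbers cited in this article. It is not Deloitte's model, and the function names are illustrative. At 32% a year the total lands near $38 billion, in the same range as the $40 billion projection. Reaching exactly $40 billion from a $12.5 billion base would take roughly 34% a year, so the gap presumably reflects rounding and the base figure in Deloitte's own estimate.

```python
def project(value: float, annual_rate: float, years: int) -> float:
    """Grow `value` at a constant compound annual rate for `years` years."""
    return value * (1 + annual_rate) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Return the constant annual growth rate that turns `start` into `end`."""
    return (end / start) ** (1 / years) - 1


losses_2023 = 12.5  # U.S. fraud losses in 2023, in $ billions (figure cited above)
cagr = 0.32         # projected compound annual growth rate

# Four compounding periods: 2023 -> 2027
losses_2027 = project(losses_2023, cagr, years=2027 - 2023)
print(f"Projected 2027 losses: ${losses_2027:.1f}B")  # Projected 2027 losses: $37.9B

# Growth rate that would be needed to reach exactly $40B from the same base
print(f"Rate implied by $40B: {implied_cagr(losses_2023, 40.0, 4):.1%}")  # Rate implied by $40B: 33.7%
```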

Last year’s losses rose 33% from 2023, to $16.6 billion, according to the FBI’s annual Internet Crime Report, released on April 23.

The FBI also received a record number of complaints in 2023, more than 880,000, with the most complaints and the greatest losses coming from California, Florida, Texas and New York, an FBI spokesperson told BAN. Complaints dropped 2.3% in 2024 to 859,532 but remained above 836,000, the annual average since 2020, according to the report.

AI is a key culprit behind the surge in fraud losses since 2020, and global fraud actors are now targeting U.S. victims, the FBI spokesperson said.

“In the last five years, there has been a shift of fraud actors targeting online services such as online banking, and fraud actors incorporating AI tools to enhance their fraud,” they said.

Cost of fraud

For every dollar lost to fraud in the U.S., the actual cost is $4.60, Jeff Scott, vice president of fraudtech at digital banking service provider Q2, told BAN. The figure comes from LexisNexis’ annual fraud report, published in April and based on responses from 569 risk executives surveyed in late 2024 and early 2025. While the $4.60 figure was unchanged from LexisNexis’ 2023 data, it marked a 23% jump from $3.75 in 2022, before the generative AI boom, according to the study.

Similarly, synthetic identity fraud, estimated to cost financial institutions $6 billion last year, could reach $23 billion in losses by 2030, Deloitte’s Lalchand said.

“The average payoff of a single synthetic ID is estimated to be between $81,000 and $98,000, but a single attack can sometimes result in the theft of several millions [of dollars].”

— Satish Lalchand, principal, Deloitte Transactions and Business Analytics

Top threats

Deloitte is seeing fraudsters exploit AI and generative AI tools for:

- Synthetic ID: Fraudster fabricates an identity, or borrows aspects of a legitimate one (e.g., name, address), to open an account;

- Account takeover: Fraudster takes control of an account through phishing or credential theft;

- Deepfake: Fraudster uses AI to create realistic fake videos or audio recordings to impersonate others or deceive individuals;

- Bank impersonation: Fraudster mimics a bank official to trick the victim into sending funds to an account controlled by the fraudster;

- Business email compromise: Fraudster sends an email that appears to come from a known source (e.g., supplier) to trick the victim;

- Vishing: Fraudster uses phone calls or voice messages to manipulate individuals into disclosing sensitive information.

AI-powered deepfakes are particularly dangerous.

“AI can generate realistic-looking fake IDs, invoices, contracts and other documents, making it easier to bypass verification processes or deceive victims,” the FBI spokesperson said.

Additionally, AI-generated videos can be used to impersonate executives and other authority figures, tricking employees into transferring funds or revealing sensitive information.

Fighting AI with AI

On the other hand, AI can also help financial institutions (FIs) mitigate fraud, Deloitte’s Lalchand said. Over the past decade, FIs have adopted numerous technology tools to analyze digital signals and collect third-party consortium data.

“Now, some organizations are looking to aggregate data across these systems to obtain a 360-degree view of the customer and prioritize among those signals. AI is well-suited to respond to that need,” he said.

In fact, 90% of FIs have incorporated AI into their fraud management strategies, according to data science firm Feedzai’s 2025 AI Trends in Fraud and Financial Crime Prevention report, which surveyed 562 financial services industry leaders in March and April.

AI is being used in fraud detection for:

- Anomaly detection and pattern recognition, including within real-time monitoring;

- Fraudulent document detection, such as spotting forged passports and other documents submitted for identity verification;

- Voice and speech analysis, including deepfake/synthetic voice detection;

- User behavior analysis;

- Phishing detection;

- Synthetic data creation for training models; and

- Collecting and summarizing information used by investigators.
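As a rough illustration of the first item on that list, the sketch below flags a transaction whose amount sits far outside a customer's own history, using a simple z-score. The function name, the three-standard-deviation threshold and the sample amounts are hypothetical. Real fraud systems typically rely on far richer features and learned models rather than a single statistic.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], amount: float, threshold: float = 3.0) -> bool:
    """Flag `amount` if it lies more than `threshold` standard deviations
    from the mean of the customer's past transaction amounts."""
    if len(history) < 2:
        return False  # too little history to estimate typical spread
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation
    if sigma == 0:
        return amount != mu  # every past amount identical: any change stands out
    return abs(amount - mu) / sigma > threshold


# Hypothetical card history for one customer, in dollars
history = [42.0, 55.5, 38.2, 61.0, 47.3, 50.1]
print(is_anomalous(history, 49.0))    # False: in line with past spending
print(is_anomalous(history, 2500.0))  # True: far outside the usual range
```

In a real-time monitoring setting, the same check would run as each transaction arrives, with the history updated afterward.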

Risk factors

Digital banks, like neobanks and fintechs, are at greater risk for identity theft, synthetic IDs and deepfake-driven account takeovers due to their reliance on electronic authentication, Lalchand said. To counter this, they’re adopting advanced tools like:

- AI-based document checks;

- Biometrics; and

- In-app verification.

These institutions often use integrated fraud platforms that assess risk across multiple channels and touchpoints in real time, a strategy the FBI supports. Traditional banks are now following suit, he said.

“The rise in online banking has also enabled fraud actors to open new bank accounts entirely online, making bust-out schemes lower risk for the individuals opening the accounts,” the FBI spokesperson said, echoing Lalchand’s statements.

The shift to digital banking, particularly during and after the pandemic, has made smaller banks and credit unions more vulnerable, as they lack the budget for sophisticated defenses, added Q2’s Scott.

However, AI-powered fraud management models are becoming more accessible across institutions of all sizes.

“In some cases, mid-size and smaller financial institutions can combat fraud faster than larger banks due to simpler systems and faster integration,” Scott said.

Finally, cryptocurrency gives bad actors a way to move funds across borders quickly and anonymously, making it difficult for victims to recover losses, according to the FBI spokesperson.

FIs, fraudsters at war

Macroeconomic uncertainty also presents a growing opportunity for fraudsters, Roy Zur, co-founder and chief executive at AI-powered security platform Charm Security, told BAN.

“The craziness in the markets, this is where scammers thrive,” Zur said. “They exploit chaos and confusion, offering misleading opportunities just when people are most anxious about their finances.”

— Roy Zur, co-founder and CEO, Charm Security

FIs have no choice but to step up communication with customers and adopt AI-powered tools to stop fraud before it happens, Zur said.

Founded in 2024, Charm raised $8 million in March in a seed round led by venture capital firm Team8. The startup uses AI to assess consumer behavior so FIs can proactively protect consumers from scams they’re most susceptible to, Zur said.

AI is critical for staying ahead of evolving threats in an increasingly digital banking landscape, said Lalchand, agreeing with Zur.

“As organizations collect various signals and data points throughout the customer journey, AI can help consolidate, prioritize and explain why certain behaviors may or may not be fraudulent,” he said.
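The idea of consolidating, prioritizing and explaining signals can be sketched in a deliberately simplified form. The example combines normalized signals into one weighted risk score and reports which signals contributed most. The signal names, values and weights are hypothetical, and a weighted average stands in for the AI models Lalchand describes. It is not how any particular vendor's platform works.

```python
from dataclasses import dataclass


@dataclass
class Signal:
    name: str
    value: float   # normalized to 0..1, where 1 is most suspicious (hypothetical scale)
    weight: float  # hypothetical importance assigned by the institution


def score_and_explain(signals: list[Signal], top_n: int = 2) -> tuple[float, list[str]]:
    """Consolidate signals into a weighted-average risk score (0..1) and
    return the names of the signals that contributed most to it."""
    total_weight = sum(s.weight for s in signals)
    # Each signal's share of the final score
    contributions = {s.name: s.value * s.weight / total_weight for s in signals}
    score = sum(contributions.values())
    # Prioritize: largest contributors first, as a simple "explanation"
    top = sorted(contributions, key=contributions.get, reverse=True)[:top_n]
    return score, top


signals = [
    Signal("new_device", 1.0, 2.0),
    Signal("geo_mismatch", 0.0, 1.0),
    Signal("document_check_failed", 0.8, 3.0),
    Signal("typing_cadence_anomaly", 0.5, 1.0),
]
score, reasons = score_and_explain(signals)
print(round(score, 2), reasons)  # 0.7 ['document_check_failed', 'new_device']
```

Reporting per-signal contributions alongside the score is one simple way to give investigators the "why" behind an alert.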

Editor’s note: This is the first installment of a three-part series and originally appeared in Bank Automation News, an Equipment Finance News sister publication.